Cracking the Code: How the CAP Theorem Influences Website Speed and Reliability

Have you ever wondered why some apps you use frequently, like banking apps, college websites, and ticket reservation systems, are often down or slow, while websites with much more traffic are always fast and ready to use?

This can happen for different reasons: the app may not be optimized enough, its architecture may be a poor fit, and so on. But sometimes it is not that the developers don't want to make it more resilient to peak traffic. Some apps simply take more work to scale.

Don't all apps cost the same to scale up? No. It depends on many things, and one of them is the CAP theorem.

What the hell is the CAP theorem?

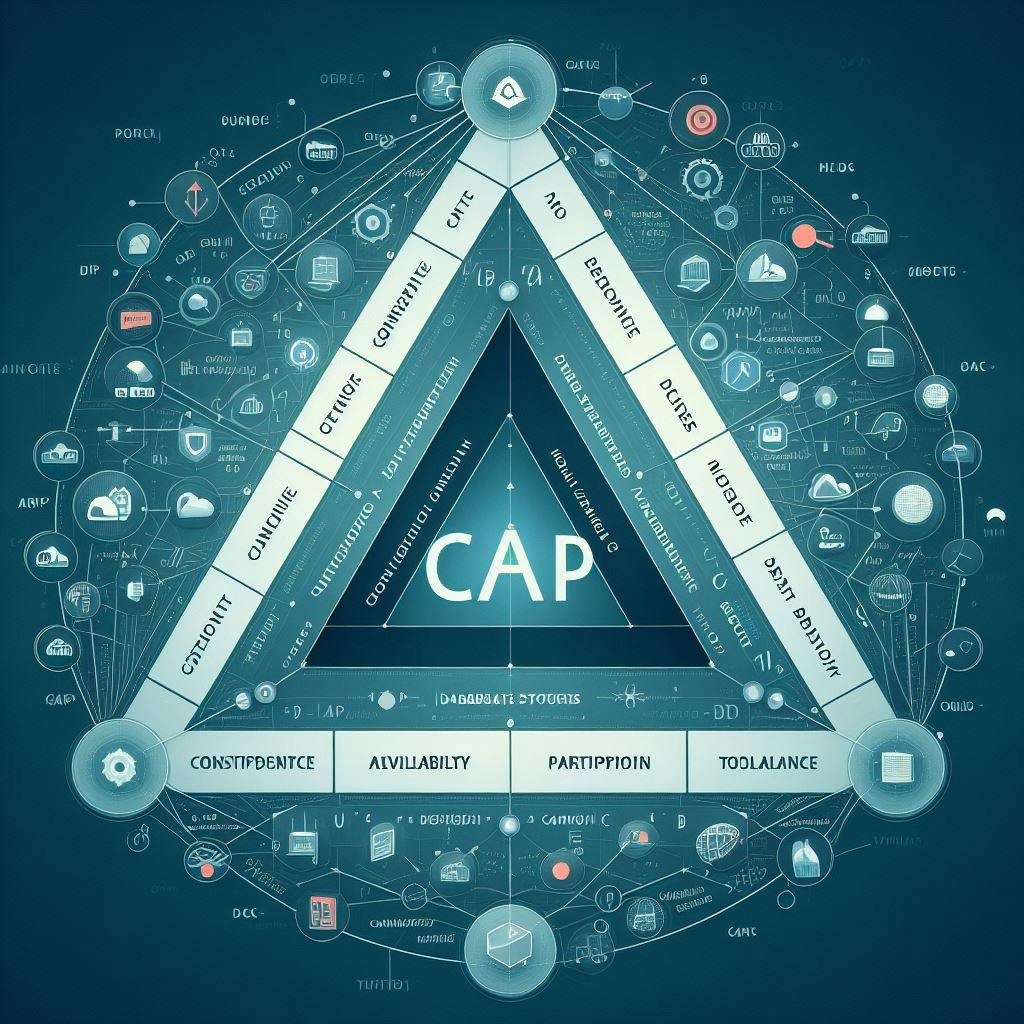

First of all, let's talk about distributed systems. When we build a distributed system, we usually want it to have excellent availability, data consistency, and tolerance to network partitions. These characteristics are what CAP stands for: Consistency, Availability, and Partition tolerance.

The theorem tells us that when a partition occurs (a network failure that leaves some nodes unable to communicate with the others), the system cannot guarantee both consistency and availability, so we must decide which one to prioritize.

Why can't we have the three of them?

The trade-off comes down to what the system does once a partition is detected. We have two options:

Block some actions to ensure data consistency

Allow all actions, but lose data consistency

Opting for availability over consistency means the system keeps accepting transactions during a partition, even though some nodes may temporarily work with stale or conflicting data.

Prioritizing consistency doesn't entail a complete system shutdown. It means blocking transactions for the affected parties while the rest of the system remains operational.
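The two options can be sketched in a few lines of Python. This is a minimal illustration, not a real replication protocol: the `Replica` class, the "CP"/"AP" mode labels, and the `pending` queue are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when a replica refuses a request to protect consistency."""


@dataclass
class Replica:
    mode: str                  # "CP" or "AP" (hypothetical labels)
    partitioned: bool = False  # True when the other replicas cannot be reached
    data: dict = field(default_factory=dict)
    pending: list = field(default_factory=list)  # writes not yet replicated

    def write(self, key, value):
        if self.partitioned and self.mode == "CP":
            # Option 1: block the action so replicas never disagree.
            raise Unavailable(f"cannot write {key!r} during a partition")
        self.data[key] = value
        if self.partitioned:
            # Option 2: accept the write; other replicas may now hold
            # different data until the partition is resolved.
            self.pending.append((key, value))
        return value


ap = Replica(mode="AP", partitioned=True)
ap.write("likes", 10)            # accepted and queued for later reconciliation

cp = Replica(mode="CP", partitioned=True)
try:
    cp.write("balance", 50)      # refused while the partition lasts
except Unavailable as err:
    print(err)
```

Note that the "CP" replica only rejects requests while it is partitioned; once `partitioned` is `False` again, both modes behave the same.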

Examples

Consider a scenario where you're part of a team developing a social media application. In this context, losing a 'like' or double-counting likes because of a partition may be acceptable. While not ideal, staying available is preferable to preventing users from interacting with the platform.
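To make the "duplicated like" case concrete, here is a small sketch. It assumes, purely for illustration, that each side of the partition records likes as a set of user ids; the names `merge_likes`, `side_a`, and `side_b` are hypothetical. Adding up the increments from each side double-counts a like that reached both sides, while merging the sets does not.

```python
def merge_likes(replica_a: set[str], replica_b: set[str]) -> set[str]:
    """Union of the user ids recorded on each side of the partition."""
    return replica_a | replica_b


before = {"ana", "ben"}               # likes known before the partition
side_a = before | {"carla"}
side_b = before | {"carla", "dev"}    # carla's retry reached the other side too

# Naive recovery: add the increments seen on each side.
naive_count = len(before) + (len(side_a) - len(before)) + (len(side_b) - len(before))

merged = merge_likes(side_a, side_b)

print(naive_count)   # 5, carla is counted twice
print(len(merged))   # 4
```

Either result may be acceptable for a like counter, which is exactly why this kind of feature can favor availability.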

Conversely, in the case of a banking application, ensuring that only users with sufficient funds can withdraw is paramount. Consequently, the system would block transactions during partitioning events to maintain data integrity.

How does this impact the performance of my software?

Scaling up a system becomes more challenging when data consistency is critical. However, does this imply it's impossible to scale such systems?

No. If that were the case, banking apps couldn't support the number of users they have. It is just more challenging to do so.

Mitigating partitioning issues

Various strategies can enhance system availability. Taking the banking example:

The system can restrict the withdrawal amounts permitted while addressing partitioning issues.

This process typically unfolds as follows:

Optimal state

Partition detection

Entering partition mode: During this phase, the application could limit withdrawal options for affected users. Additionally, the system must keep track of the transactions it accepts and determine which ones to prioritize, often using logs, version vectors, or alternative strategies.

Initiating partition recovery: Once communication is restored, the system makes sure all data is accurately stored and runs processes to reconcile the changes made during the partition.
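The phases above can be sketched as a tiny state machine. This is an illustrative sketch under stated assumptions, not how any real bank works: the `Account` class, the `PARTITION_LIMIT` cap of 100, and the idea that recovery receives a plain list of withdrawals accepted on the other side are all hypothetical.

```python
from enum import Enum


class State(Enum):
    NORMAL = "normal"
    PARTITIONED = "partitioned"


class Account:
    PARTITION_LIMIT = 100  # hypothetical cap per withdrawal while partitioned

    def __init__(self, balance: int):
        self.balance = balance
        self.state = State.NORMAL
        self.log: list[int] = []  # withdrawals accepted during the partition

    def detect_partition(self) -> None:
        self.state = State.PARTITIONED

    def withdraw(self, amount: int) -> bool:
        if amount > self.balance:
            return False
        if self.state is State.PARTITIONED:
            if amount > self.PARTITION_LIMIT:
                return False         # restricted while partitioned
            self.log.append(amount)  # remember it for recovery
        self.balance -= amount
        return True

    def recover(self, remote_withdrawals: list[int]) -> int:
        """Apply withdrawals accepted on the other side and leave partition mode.

        Returns the overdraft (0 if none) that must be handled separately.
        """
        self.balance -= sum(remote_withdrawals)
        overdraft = max(0, -self.balance)
        self.log.clear()
        self.state = State.NORMAL
        return overdraft


account = Account(500)
account.detect_partition()
print(account.withdraw(400))    # False: above the partition-mode cap
print(account.withdraw(80))     # True: small withdrawals stay available
print(account.recover([100]))   # 0: no overdraft after reconciling
print(account.balance)          # 320
```

The cap is the design choice that matters here: it bounds how far the two sides can drift apart, so the worst-case overdraft found during recovery stays small.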

Why do we have to do all this to have more availability?

Because sometimes the cost of denying requests to preserve consistency is higher than the cost of prioritizing availability. So we keep the system available and add mechanisms that let it recover from a partition in the best possible way.